Photo by Mike Swigunski on Unsplash

An approach for deploying simple File Integrity Monitoring (FIM) using Sumo Logic

(for Linux)

Introduction

Although Sumo Logic doesn't offer a pre-packaged FIM solution, it's now feasible to establish a basic FIM by utilizing Sumo Logic's Script Source feature.

With the help of some custom bash scripts, Sumo Logic can monitor file states (including checksums such as MD5, SHA-1, or SHA-256, and file attributes) and identify alterations to those states over time. The example in this article uses SHA-256.

The changes detected are saved to a Sumo Logic index, which keeps track of these events. By utilizing the contents of this index, users can generate alerts, visualizations, and reports.

This approach creates a Script Source within Sumo Logic Collectors, which will run a bash script to monitor a list of files and directories for changes (FIM Compliance).

If any changes are detected, it will log them into the Sumo Logic platform.

Constraints

Since this is not a pre-built solution and relies on customized scripts to generate File Integrity Information for tracking purposes, these scripts are simply provided as examples 'as is'.

Determining which files should be monitored is up to the deployer. FIM best practices are a good starting point for that list.

This custom implementation keeps track of SHA-256 file hashes. It records when a monitored file has changed and attaches diff output to the log message. Anything deeper, such as tracking permissions or ownership, is something you will need to investigate separately.
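For readers who do want to go a step further, here is a minimal sketch of what capturing attributes alongside the hash could look like. It is written in Python rather than bash, and the names file_state and changed_fields are hypothetical helpers made up for this illustration. They are not part of the scripts in this article.

```python
import hashlib
import os
import stat


def file_state(path):
    """Return a dict with the file's SHA-256 hash and a few attributes."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        # Read in chunks so large files are not loaded into memory at once.
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    info = os.stat(path)
    return {
        "sha256": digest.hexdigest(),
        "mode": stat.filemode(info.st_mode),  # for example "-rw-r--r--"
        "uid": info.st_uid,
        "gid": info.st_gid,
        "size": info.st_size,
    }


def changed_fields(old, new):
    """List the keys whose values differ between two snapshots."""
    return sorted(key for key in new if old.get(key) != new[key])
```

Taking one snapshot per run and passing the previous and current snapshots to changed_fields would tell you whether the content, the permissions, or the ownership changed, instead of only that the hash is different.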

Yes. This is a bare-bones/workaround FIM solution for Sumo Logic.

Deployment

Configuring a 'Script Source' on the hosts you wish to monitor

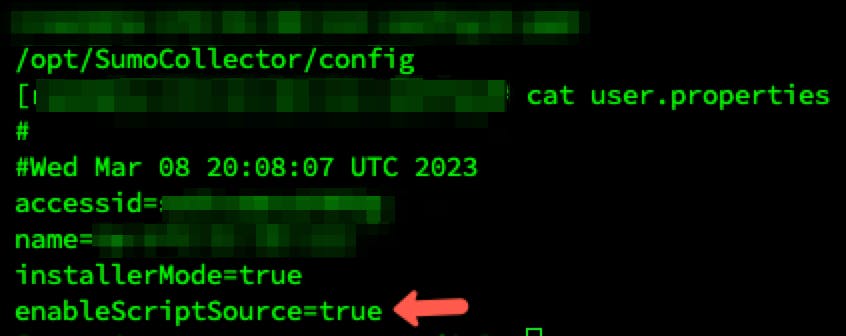

By default, the Sumo Logic Collector does not allow Script Sources. To enable them, add enableScriptSource=true to the user.properties file located in <SUMOCOLLECTOR_PATH>/config and restart the Collector.

To begin monitoring, install a Sumo Logic Collector on each machine you want to track, then configure a Script Source that runs a bash script like the following example:
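If you manage many hosts, you may prefer to script that change. The following is a minimal sketch, assuming user.properties is a plain key=value file. The helper name ensure_property is hypothetical and made up for this example.

```python
from pathlib import Path


def ensure_property(properties_path, key, value):
    """Set key=value in a key=value properties file, adding the line if absent.

    Returns True when the file was modified, False when it was already set.
    """
    path = Path(properties_path)
    lines = path.read_text().splitlines() if path.exists() else []
    wanted = f"{key}={value}"
    for index, line in enumerate(lines):
        name = line.split("=", 1)[0].strip()
        if name == key:
            if line.strip() == wanted:
                return False  # already configured, nothing to do
            lines[index] = wanted  # replace a different existing value
            break
    else:
        lines.append(wanted)  # key not present yet
    path.write_text("\n".join(lines) + "\n")
    return True
```

You would call it as ensure_property("<SUMOCOLLECTOR_PATH>/config/user.properties", "enableScriptSource", "true") with your real Collector path. Because it returns False when nothing changed, it is safe to run repeatedly.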

#!/bin/bash

# List of files to monitor
FILES=(/etc/hosts /etc/passwd /etc/shadow)

# Location to store checksums
CHECKSUM_DIR=/var/log/fim

# Create the checksum directory if it does not exist
mkdir -p "$CHECKSUM_DIR"

# Record a baseline checksum for any file that does not have one yet
for file in "${FILES[@]}"
do
    # Get the file basename
    basename=$(basename "$file")
    if [ ! -f "$CHECKSUM_DIR/$basename.sha256" ]; then
        # Store the checksum, path, and basename as the baseline record
        echo "$(sha256sum "$file") $basename" > "$CHECKSUM_DIR/$basename.sha256"
    fi
done

# Compare current checksums to the stored checksums
for file in "${FILES[@]}"
do
    # Get the file basename
    basename=$(basename "$file")
    # Build the current record in the same format as the stored one
    current_checksum="$(sha256sum "$file") $basename"
    # Get the previously stored record
    previous_checksum=$(cat "$CHECKSUM_DIR/$basename.sha256")
    # Compare current and previous records
    if [ "$current_checksum" != "$previous_checksum" ]; then
        # Generate the log message; newlines in the diff output become spaces so the event stays on one line
        message="$(date "+%Y-%m-%d %H:%M:%S") - The file $basename has been modified. CHANGES: "
        message+=$(diff --unified "$file" <(cat "$CHECKSUM_DIR/$basename.sha256") | tr '\n' ' ')
        echo "$message"
        # Update the stored record with the new checksum
        echo "$current_checksum" > "$CHECKSUM_DIR/$basename.sha256"
    fi
done

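This bash script monitors the integrity of the listed files by calculating their SHA-256 checksums and comparing them with the values stored in the checksum directory. Note that sha256sum operates on regular files, so a directory has to be expanded into the files it contains before it is added to FILES.

When a change is reported, the script runs diff --unified on the current file and the stored checksum record, and appends the output to a log message.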

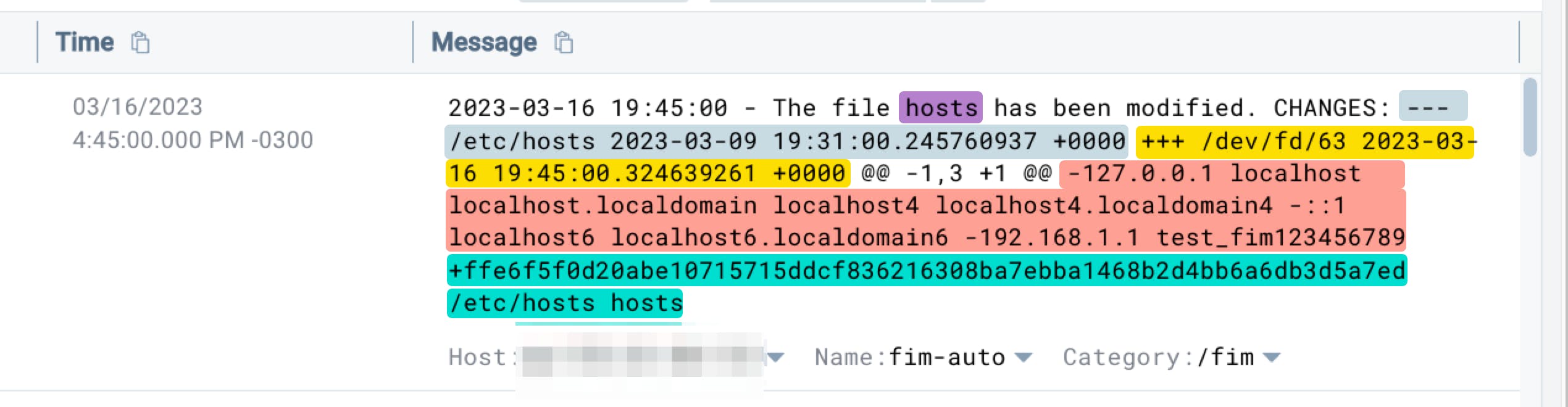

Log Example:

2023-03-16 19:45:00 - The file hosts has been modified. CHANGES: --- /etc/hosts 2023-03-09 19:31:00.245760937 +0000 +++ /dev/fd/63 2023-03-16 19:45:00.324639261 +0000 @@ -1,3 +1 @@ -127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 -::1 localhost6 localhost6.localdomain6 -192.168.1.1 test_fim123456789 +ffe6gf0d20abe99999999ddcf999216308ba7ebga1999b2d4bb6a6bd3d5a7ed /etc/hosts hosts

NOTE: To ease deployment, the bash script above is embedded in the Python script shown later in this article.

Log Explanation:

This log message shows that the hosts file has been modified. The text after CHANGES: is the output of diff --unified, which compares the current contents of /etc/hosts with the stored checksum record.

The line starting with "---" names the first input, /etc/hosts, along with its last modification time (March 9, 2023). The line starting with "+++" names the second input, the stored checksum record. It appears as /dev/fd/63 because the script passes it to diff through process substitution.

Lines starting with a "-" symbol exist only in the first input, and lines starting with a "+" symbol exist only in the second input.

The text between "@@" is the hunk header. Here, -1,3 means the hunk covers 3 lines of the first input starting at line 1, and +1 means it covers a single line of the second input starting at line 1.

In this case, the "-" lines are the current contents of /etc/hosts: 127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 ::1 localhost6 localhost6.localdomain6 192.168.1.1 test_fim123456789

The "+" line is the stored checksum record, made up of the SHA256 checksum, the file path, and the basename: ffe6f5f0d20abe10715715ddcf836216308ba7ebba1468b2d4bb6a6db3d5a7ed /etc/hosts hosts
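To make the unified diff notation more concrete, here is a small sketch that uses Python's difflib module to produce the same kind of output from two in-memory versions of a file. The sample lines and the parse_hunk_header helper are made up for illustration and are not part of the FIM script.

```python
import difflib
import re

# Two hypothetical versions of a hosts file, as lists of lines.
old = ["127.0.0.1 localhost\n", "::1 localhost6\n", "192.168.1.1 test_fim\n"]
new = ["127.0.0.1 localhost\n", "::1 localhost6\n", "192.168.1.2 test_fim\n"]

# unified_diff yields the "---" and "+++" headers, then the hunk header and body.
diff_lines = list(
    difflib.unified_diff(old, new, fromfile="old/hosts", tofile="new/hosts")
)

HUNK_HEADER = re.compile(r"@@ -(\d+)(?:,(\d+))? \+(\d+)(?:,(\d+))? @@")


def parse_hunk_header(line):
    """Return (old_start, old_count, new_start, new_count) for a hunk header.

    A missing count means a single line, as in the "+1" of "@@ -1,3 +1 @@".
    """
    match = HUNK_HEADER.match(line)
    if match is None:
        raise ValueError(f"not a hunk header: {line!r}")
    old_start, old_count, new_start, new_count = match.groups()
    return (
        int(old_start),
        int(old_count) if old_count is not None else 1,
        int(new_start),
        int(new_count) if new_count is not None else 1,
    )
```

Calling parse_hunk_header("@@ -1,3 +1 @@") on the header from the log example gives 3 lines starting at line 1 on the "-" side and 1 line starting at line 1 on the "+" side.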

Functionality

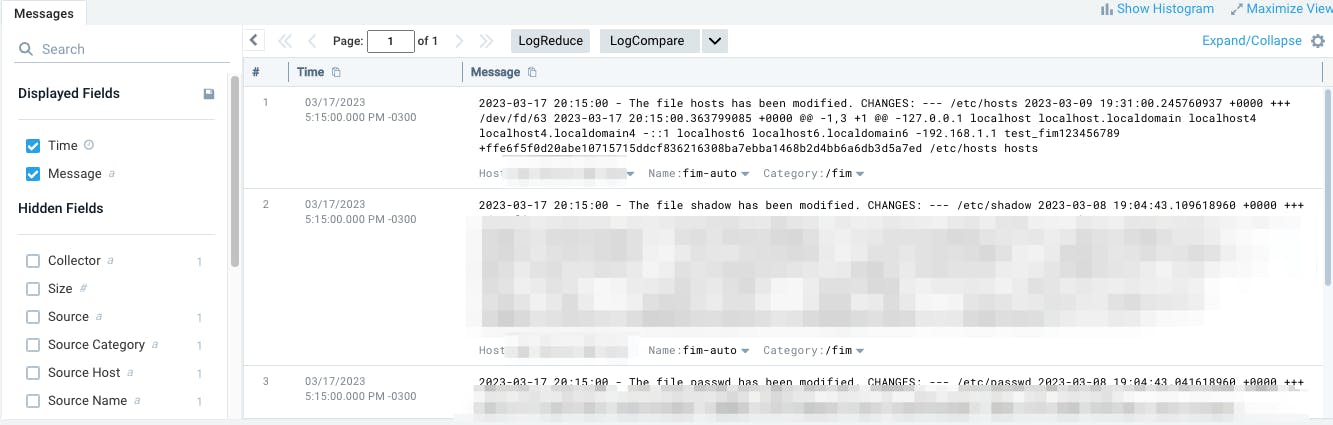

If you're monitoring multiple machines, make sure that every Script Source collects data under the same _sourceCategory tag. To deploy the Script Source to every host in bulk, I've developed the following Python script:

import requests

# Bash script that every Script Source will run (a raw string, so no manual escaping is needed)
FIM_SCRIPT = r"""#!/bin/bash

# List of files to monitor
FILES=(/etc/passwd /etc/shadow /etc/hosts)

# Location to store checksums
CHECKSUM_DIR=/var/log/fim

# Create the checksum directory if it does not exist
mkdir -p "$CHECKSUM_DIR"

# Record a baseline checksum for any file that does not have one yet
for file in "${FILES[@]}"
do
    basename=$(basename "$file")
    if [ ! -f "$CHECKSUM_DIR/$basename.sha256" ]; then
        echo "$(sha256sum "$file") $basename" > "$CHECKSUM_DIR/$basename.sha256"
    fi
done

# Compare current checksums to the stored checksums
for file in "${FILES[@]}"
do
    basename=$(basename "$file")
    current_checksum="$(sha256sum "$file") $basename"
    previous_checksum=$(cat "$CHECKSUM_DIR/$basename.sha256")
    if [ "$current_checksum" != "$previous_checksum" ]; then
        # Generate the log message on a single line
        message="$(date "+%Y-%m-%d %H:%M:%S") - AUTO - The file $basename has been modified. CHANGES: "
        message+=$(diff "$file" <(cat "$CHECKSUM_DIR/$basename.sha256") | tr '\n' ' ')
        echo "$message"
        # Update the stored record with the new checksum
        echo "$current_checksum" > "$CHECKSUM_DIR/$basename.sha256"
    fi
done
"""

# Creates a Script Source based on payload
def createScriptSource(headers, collectorID, access_id, access_key):
    data = {
        "source": {
            "name": "fim-auto-1",
            "category": "/fim",
            "description": "",
            "sourceType": "Script",
            "messagePerRequest": "true",
            "commands": ["/bin/bash"],
            "script": FIM_SCRIPT,
            "cronExpression": "0 0/15 * 1/1 * ? *"
        }
    }
    response = requests.post(f'<YOUR_SUMOLOGIC_ENDPOINT>/api/v1/collectors/{collectorID}/sources',
                             headers=headers, json=data, auth=(access_id, access_key))
    print(response.status_code, response.text)

# Set Header
headers = {'Content-Type': 'application/json'}

# Set Sumo Logic API credentials
access_id = "<YOUR_ACCESS_ID>"
access_key = "<YOUR_ACCESS_KEY>"

# Get Sumo Collector IDs
response = requests.get('<YOUR_SUMOLOGIC_ENDPOINT>/api/v1/collectors', headers=headers, auth=(access_id, access_key))
response.raise_for_status()

# Iterates through each Collector ID calling "createScriptSource" to create a Source for each Sumo Collector
for col in response.json()["collectors"]:
    collID = col["id"]
    createScriptSource(headers, collID, access_id, access_key)

The Python script performs the following tasks:

Sends a request to get the Sumo Collector IDs

Iterates through each Collector ID, calling the createScriptSource function with the necessary parameters to create a Source for each Sumo Collector

The function creates a new Script Source in every Sumo Logic Collector using the information provided in the payload
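If you would rather keep the bash script in its own file than paste it into the Python source, the payload can be built from the file contents. This is a minimal sketch: the helper name build_script_source and the file name fim.sh are hypothetical, and the field names simply mirror the payload shown above.

```python
import json


def build_script_source(script_text, name="fim-auto-1", category="/fim",
                        cron="0 0/15 * 1/1 * ? *"):
    """Build a Script Source payload with the same fields as the one above."""
    return {
        "source": {
            "name": name,
            "category": category,
            "description": "",
            "sourceType": "Script",
            "messagePerRequest": "true",
            "commands": ["/bin/bash"],
            "script": script_text,
            "cronExpression": cron,
        }
    }


def payload_from_file(script_path, **kwargs):
    """Read a script file (for example a hypothetical fim.sh) into a payload."""
    with open(script_path, encoding="utf-8") as handle:
        return build_script_source(handle.read(), **kwargs)


def to_json(payload):
    """Serialize the payload; json.dumps escapes quotes and newlines for us."""
    return json.dumps(payload)
```

The resulting dictionary can be passed as the json= argument of the request, which avoids hand-escaping quotes and newlines in the script text.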

Conclusion

The Python code can be used to automate the process of creating a Script Source in the Sumo Logic platform to monitor files and directories for changes.

By setting a cron expression, the script is executed at regular intervals (every 15 minutes in the example above), so the monitored files are checked on an ongoing basis.
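The cron expression used above can look cryptic at first. The sketch below labels its fields, assuming the seven-field Quartz-style layout (seconds first, optional year last) that the expression appears to follow. The helper names are hypothetical and made up for this example.

```python
# Field order assumed for a Quartz-style cron expression.
QUARTZ_FIELDS = (
    "seconds", "minutes", "hours", "day_of_month", "month", "day_of_week", "year",
)


def describe_cron(expression):
    """Map each part of a 6- or 7-field cron expression to its field name."""
    parts = expression.split()
    if len(parts) not in (6, 7):
        raise ValueError(f"expected 6 or 7 fields, got {len(parts)}")
    return dict(zip(QUARTZ_FIELDS, parts))


def minute_marks(field):
    """Expand a 'start/step' minutes field into the minutes it matches."""
    start, step = field.split("/")
    return list(range(int(start), 60, int(step)))
```

For "0 0/15 * 1/1 * ? *", describe_cron reports "0/15" as the minutes field, and minute_marks("0/15") expands that to minutes 0, 15, 30, and 45 of every hour.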

Now, you know! 😉